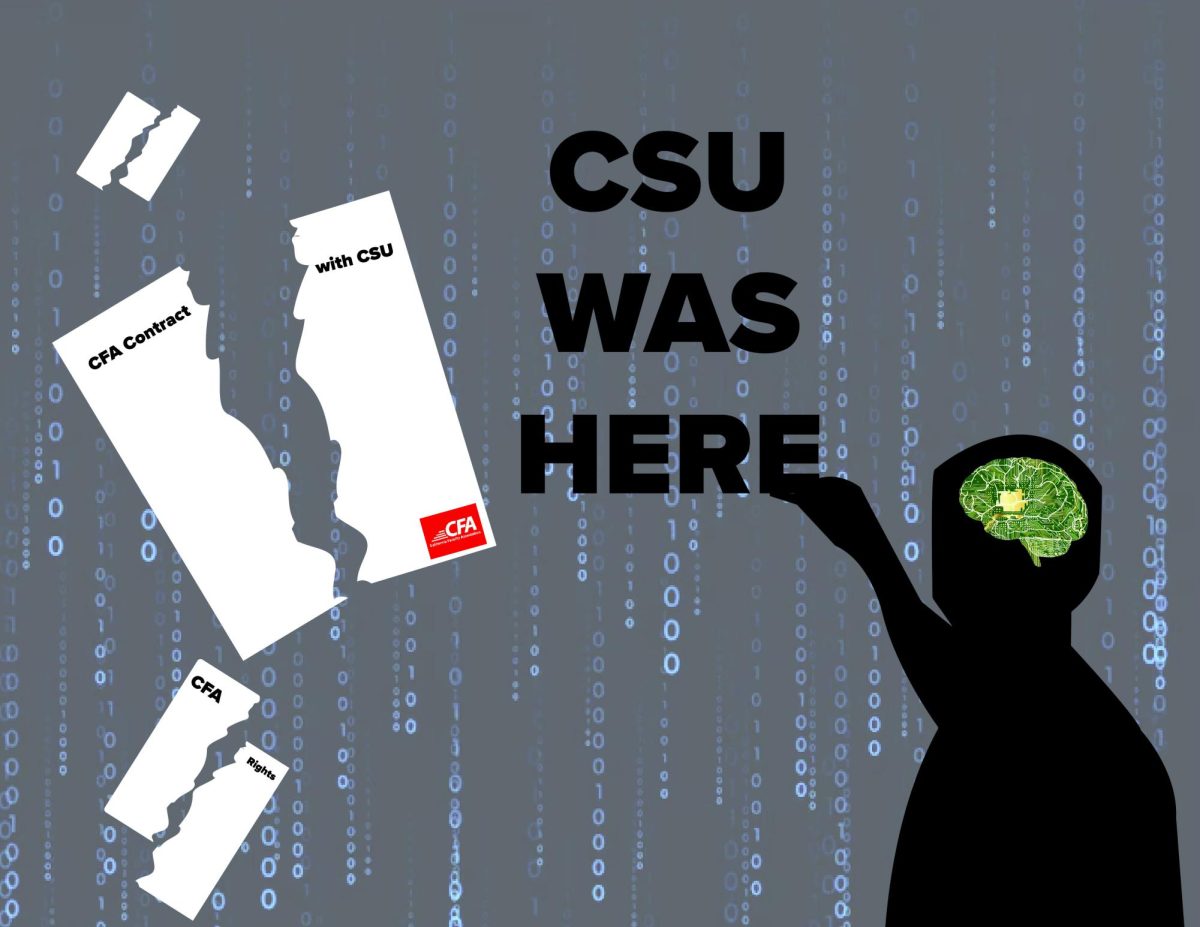

The California Faculty Association (CFA) has filed an Unfair Practice Charge with the California Public Employment Relations Board, claiming that California State University (CSU) administrators violated labor law by failing to consult faculty before launching a major partnership with artificial intelligence company OpenAI, which is known for its chatbot, ChatGPT.

CFA believes that the CSU’s shift into a fully autonomous university system without faculty input violates labor law.

The union also raised concerns about AI technology’s inaccuracies, unclear data storage practices, environmental harms, mirroring of the racism and sexism of the “larger culture” and possible reliance on human rights exploitation.

The union said the CSU system avoided its obligation to “meet and confer” with the unionized faculty despite pleas to bargain, “leaving faculty out of the decision-making process,” as CFA wrote in a statement.

“We want to have a voice on how it’s used,” said Brian Dolber, the chief of AI for the CFA.

“If there’s a shift in policy from management, it’s customary and expected that, when there’s a collective bargaining unit that would be impacted by that change in policy, management has meetings with representatives of the union to figure out what those practices should be and if they’re acceptable,” Dolber said.

The CSU system showed no interest in discussing the AI initiative: it walked out of negotiations last month and refused to return to the negotiating room the following week.

“They have to consult us on that, and you know that they know what the answer is going to be … they know that we’re going to have concerns about how it gets implemented, and the resources that are being spent on it,” Dolber said.

The reason for this behavior was obvious to Dolber and the CFA.

“The CSU is interested in — and I’ll say more specifically — Chancellor Millie Garcia is interested in building what she’s calling an AI-driven or AI-powered university,” Dolber said.

“To create an AI-powered university, to me, is to say that the work of faculty and other workers at the university is being devalued,” he added.

San Diego State University (SDSU) signaled its move toward an autonomous university system with its $1.3 million AI-powered, facial recognition-equipped camera package, which Dolber called “a major threat to both faculty and students.”

Ryan Serpico, the deputy director of AI and automation at media conglomerate Hearst, said Hearst has a similar licensing agreement with OpenAI.

“We are giving them access to our content in order to be able to train on it, and in exchange, we are getting cash,” Serpico said. “I can imagine why OpenAI and other labs want to have access to any academic systems and data. Whether it be course materials or videos.”

One of CFA’s biggest concerns is intellectual property.

Dolber said that if AI is learning from student and faculty content, there is no way to know how that information will be used and no one would be compensated for it.

“All of our knowledge then becomes grist for the mill … we’re building robots that will destroy us,” Dolber said.

While the state proposed funding cuts to the university, Dolber said a major concern is the CSU’s “taking of an additional $18 million and giving it to Silicon Valley to create tools that will ultimately displace work.” That spending was not an isolated incident.

Before the partnership with OpenAI was announced, CFA was already preparing for AI. It had passed a resolution calling for a new collective bargaining agreement article governing the use of AI, describing the CSU’s adoption of new technology as part of a larger pattern of bypassing labor oversight.

The resolution stated, “The CSU and individual campuses regularly sign contracts with tech companies, implement new learning technologies, and impose new technological ‘solutions,’ including ‘smart,’ ‘AI-powered,’ or ‘data-driven’ technologies, without consulting CFA.”

There is a major concern within the union that this could lead to a series of labor law violations. If AI causes a “speed up,” it would mean larger classes and less oversight for instructors, which would violate workload limits.

Surveillance is a major concern for CFA as well. Dolber believes that AI initiatives like the CSU system’s partnership with OpenAI, coupled with the presidential administration’s recent actions limiting what is taught at universities, could lead to “ideological policing.”

“There’s a threat to faculty and students who are on visas and who may have political critiques of the government and the administration,” Dolber said. “I think the ability to draw on that information or those conversations, through what may be happening, and through online portions of our classes … is a massive problem.”

CFA also expressed concerns over academic integrity, exploitation of low-wage labor, climate consequences and a so-called “dead internet” flooded with machine-generated content.

Dolber said that students and staff do not have to normalize this shift and this technology.

“Technological developments are not inevitable … we have agency in shaping what technologies emerge, how they emerge, how they’re deployed, how they’re used, what their effects are,” Dolber said.

CFA aims to take a step back in order to fully understand what AI is going to mean for workers and the future of the world.

CFA’s stance on AI is simple.

“The idea that we are going to just accept that this is the future of our world and that we have no say in it — it’s like, we do have a say in it. And that’s what collective bargaining does,” Dolber said.